I am a Research Scientist at DeepMind working on Reinforcement Learning.

Previously, I was a Research Scientist leading the learning team at Latent Logic (now part of Waymo) where our team focused on Deep Reinforcement Learning and Learning from Demonstration techniques to generate human-like behaviour that can be applied to data-driven simulators, game engines and robotics.

I received my PhD from the Department of Computing at Imperial College London where I studied Computational Neuroscience and Machine Learning at the Brain and Behaviour Lab. My main research focused on investigating the underlying algorithms employed by the human brain for object representation and inference. I stayed for a Postdoc in the lab to continue my research on investigating the dynamics of uncertainty in sensorimotor perception. I previously obtained my MSc in Artificial Intelligence with distinction at Imperial College London.

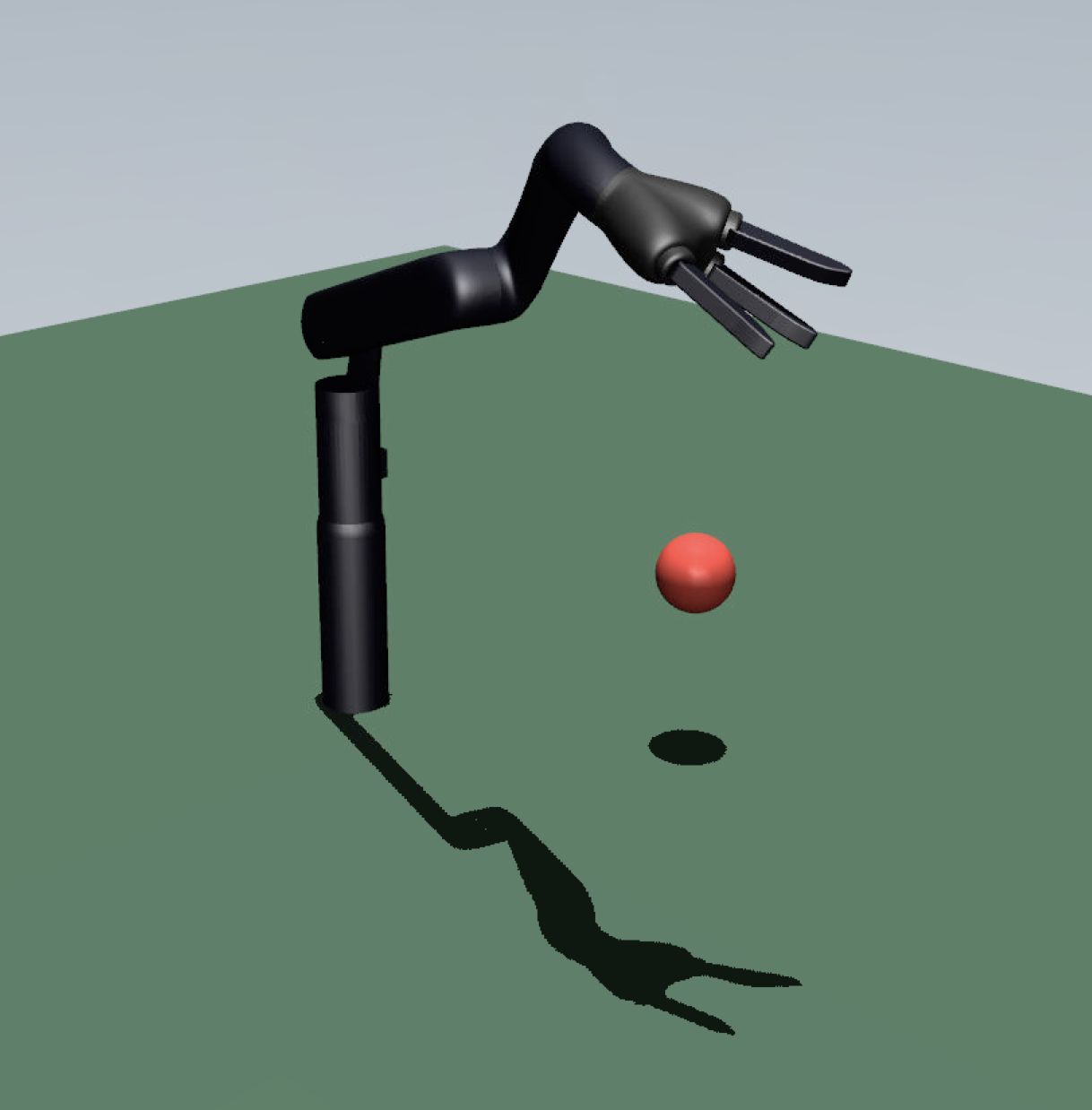

I have also worked on several projects building machine learning solutions for a variety of problems as part of a technology consultancy start-up I co-founded. More recently, as a Visiting Postdoctoral Researcher at the BICV Group at Imperial College London, I worked on transfer learning and Deep Reinforcement Learning applied to the control of a Jaco robotic arm.

Ph.D. in Computing, 2016

Imperial College London

MSc in Artificial Intelligence with distinction, 2012

Imperial College London

BSc in Information Technology with First Class Honours (1st in class), 2010

Heriot-Watt University

Mon, Nov 26, 2018, Distinguished Speakers: Oxford Women in Computer Science

Sat, Jul 28, 2018, Jeju Deep Learning Summer School

Fri, Sep 22, 2017, RE-WORK Deep Learning Summit London

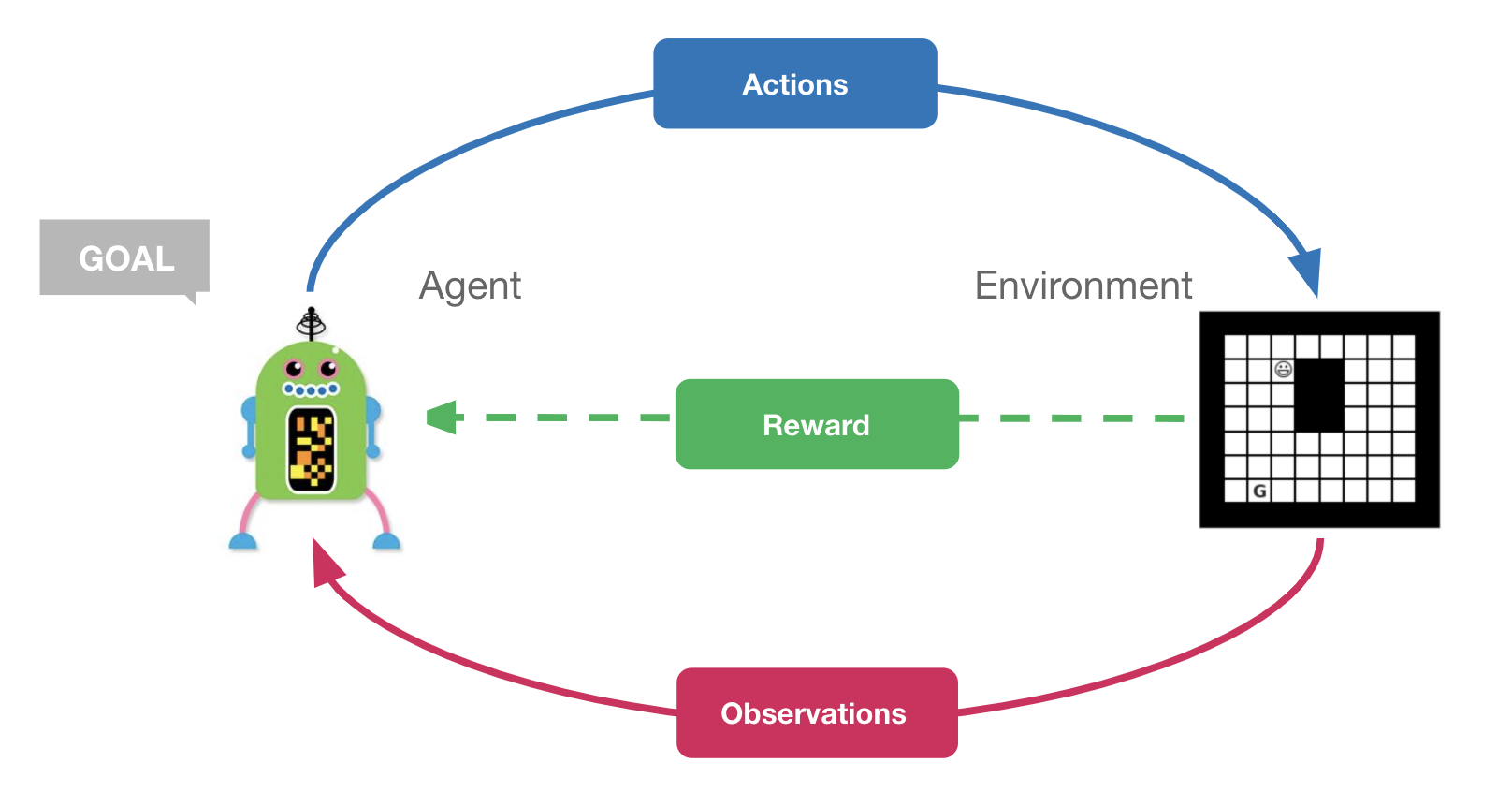

The tutorial covers a number of important reinforcement learning (RL) algorithms, including Policy Iteration, Q-Learning, Neural Fitted Q, and DQN in JAX. In the first part, we will guide you through the general interaction between RL agents and environments, where agents take actions in order to maximize return (i.e. cumulative reward). Next, we will implement Policy Iteration, SARSA, and Q-Learning for a simple tabular environment. The core ideas of Q-Learning will then be scaled to more complex MDPs through the use of function approximation. Lastly, we will provide a short introduction to deep reinforcement learning and the DQN algorithm.
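To give a flavour of the tabular part, here is a minimal sketch of Q-Learning in plain Python. It is not the tutorial's own code: the five-state chain environment, the `step` helper, and all hyperparameter values are illustrative assumptions.

```python
import random

N_STATES = 5      # hypothetical toy chain: states 0..4, state 4 is terminal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic chain dynamics; reward 1.0 only on reaching the last state."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-Learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability epsilon, and break ties at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])
            next_state, reward, done = step(state, action)
            # Bootstrap from the greedy value of the next state (off-policy target).
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Greedy action for every non-terminal state."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
```

Calling `greedy_policy(q_learning())` on this toy chain should recover the "always move right" policy. Replacing the `max` in the target with the value of the action actually taken next would turn the update into SARSA.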

This is my blog post on our recent paper, which presents a novel method for learning from demonstration in the wild that can leverage the abundance of freely available videos of natural behaviour. We propose ViBe, a new approach to learning models of behaviour that requires as input only unlabelled raw video data. Our method calibrates the camera, detects relevant objects, tracks them reliably through time, and uses the resulting trajectories to learn policies via imitation. We introduce Horizon GAIL, an extension to GAIL that uses a novel curriculum to help stabilise learning.

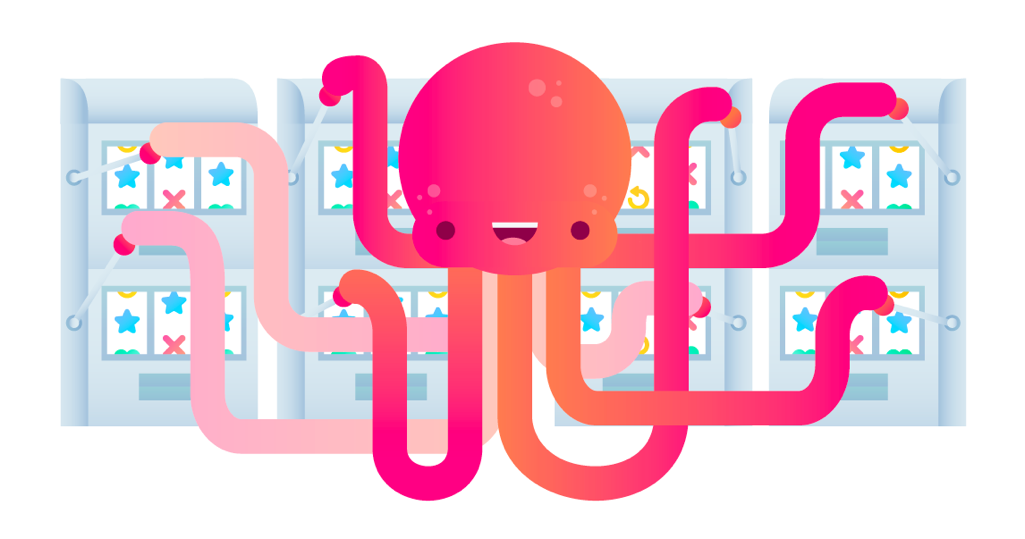

In this project, I set out to train an automatic curriculum generator using a teacher network (Multi-Armed Bandit) that keeps track of the progress of the student network (IMPALA) and proposes new tasks as a function of how well the student is learning.
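The teacher-student loop described above can be sketched roughly as follows. This is a simplified illustration, not the project's code: the `BanditTeacher` class, the epsilon-greedy rule, the use of absolute score change as the bandit reward, and the `toy_student_score` stand-in for a real student such as IMPALA are all assumptions made for the example.

```python
import random


class BanditTeacher:
    """Epsilon-greedy bandit whose reward is the student's absolute learning progress."""

    def __init__(self, n_tasks, epsilon=0.1, step_size=0.3, seed=0):
        self.rng = random.Random(seed)
        self.epsilon = epsilon
        self.step_size = step_size
        self.progress = [0.0] * n_tasks    # running estimate of |score change| per task
        self.last_score = [None] * n_tasks
        self.visits = [0] * n_tasks

    def propose_task(self):
        # Try every task twice first, so each has one progress measurement.
        under = [t for t, v in enumerate(self.visits) if v < 2]
        if under:
            return min(under, key=lambda t: self.visits[t])
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.progress))
        return max(range(len(self.progress)), key=lambda t: self.progress[t])

    def update(self, task, score):
        previous = self.last_score[task]
        self.last_score[task] = score
        self.visits[task] += 1
        if previous is not None:
            reward = abs(score - previous)  # how much the student changed on this task
            self.progress[task] += self.step_size * (reward - self.progress[task])


def toy_student_score(task, practice_counts):
    """Hypothetical student: task 0 is mastered, task 1 is learnable, task 2 is too hard."""
    practice_counts[task] += 1
    if task == 0:
        return 1.0
    if task == 1:
        return min(1.0, 0.1 * practice_counts[task])
    return 0.0


def run_curriculum(rounds=20, epsilon=0.1):
    teacher = BanditTeacher(n_tasks=3, epsilon=epsilon)
    practice_counts = [0, 0, 0]
    chosen = []
    for _ in range(rounds):
        task = teacher.propose_task()
        score = toy_student_score(task, practice_counts)
        teacher.update(task, score)
        chosen.append(task)
    return chosen
```

In this toy setup the teacher spends most of its budget on the one task where the student's score is still changing, which is the behaviour the curriculum generator is meant to produce. A non-zero `epsilon` keeps the other tasks from being ignored forever once progress on the learnable task flattens out.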

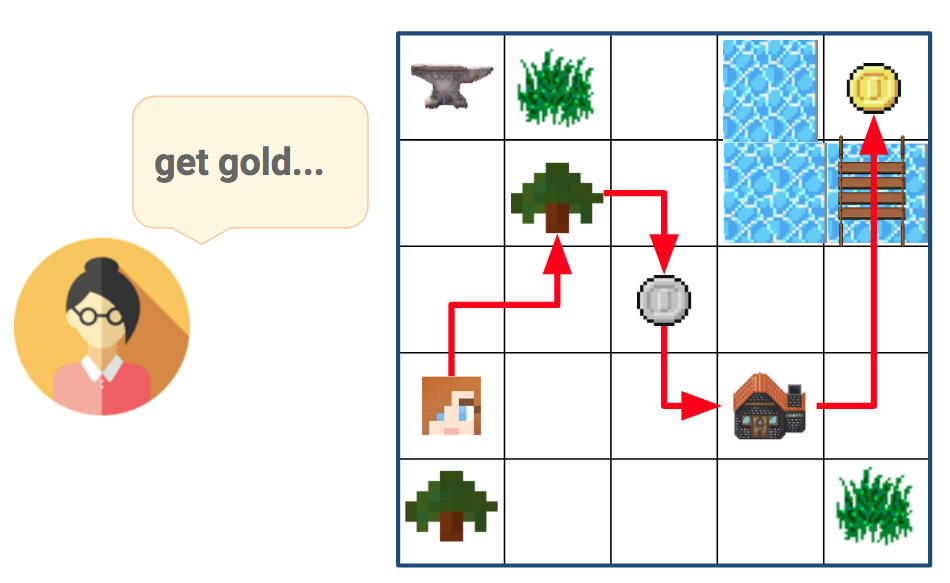

CraftEnv is a 2D crafting environment that supports a fully flexible setup of hierarchical tasks, with sparse rewards, in a fully procedural setting.

Applying end-to-end learning to solve pixel-driven control, where learning is accomplished using the Asynchronous Advantage Actor-Critic (A3C) method with sparse rewards.

What I’m doing now

(This is a now page!)

I’ll be giving a lecture and a tutorial on Reinforcement Learning in August at MLSS.

Recently we organised a breakout session on Continual Reinforcement Learning at the ICML WiML Un-workshop, which was very exciting. We will release the session notes on our website.

I really enjoyed participating in the EEML Summer School and giving a tutorial on reinforcement learning. If you want to learn more, check out the tutorial here.

Our NeurIPS 2019 Workshop on Biological and Artificial Reinforcement Learning got accepted!

I’m very excited to announce that I have joined Nando de Freitas’ team at DeepMind as a Research Scientist.

I’m very excited to be a mentor for OpenAI’s Scholars in Winter!

I’m really looking forward to attending SOCML 2018 and moderating the session on Curiosity-Driven RL! Find the session notes here! We’re welcoming any feedback you might have as we are preparing a report to release soon!

This past summer, I had the chance to join Jeju DL Camp, held on beautiful Jeju Island in South Korea, and work on what I’m really passionate about: Automated Curriculum Learning for RL! You can find the open-source code and a brief summary here: Automated Curriculum Learning for RL. You can also check the slides for the talk I gave at the TensorFlow Summit in Korea. I’m currently working on a blog post for the project and I’ll present this work at the WiML Workshop in December!

I’m also a mentor for WiML Workshop 2018 and very excited to meet all the amazing students doing incredible research in ML!

I co-organised a Workshop on Learning from Demonstrations for high-level robotic tasks at RSS 2018 in June. I’m currently writing a survey paper on the role of imitation for learning, investigating challenges and exciting future directions.

I’m currently reading The World as Laboratory: Experiments with Mice, Mazes and Men, which is a fascinating and at times disturbing account of animal and human experiments carried out during the 20th century!